The self-taught AI guy is a hit: he doesn't have an ML degree, but he got an offer from DeepMind

Alongside his "main line" of study, he realised in early 2020 that his mathematics was lacking, so he spent time reading Learning How to Learn, the Python Data Science Handbook, Deep Learning and other books to accelerate his learning.

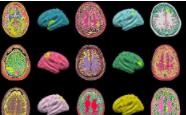

At the same time, he kept learning and consolidating the relevant knowledge. He filled in gaps in his understanding of CNNs and their optimisation, and implemented vanilla GAN, cGAN (conditional GAN) and DCGAN models. Reviewing old material while steadily layering on new knowledge kept strengthening his knowledge base. This is an image of the output of the DCGAN model he trained after studying GANs.

Morphing from a man to a woman (the viewing angle and skin colour also change)

Along the way, he also adjusted and refined his learning plan according to how difficult each topic turned out to be and how things were actually going. For example, he changed how long he spent on a topic: he found neural style transfer (NST) so interesting that he spent more than the 3 months he had planned on it.

He also distilled and summarised what he had learnt. While studying GANs, he began writing a blog post at the end of each major topic to summarise it.

Reinforcement learning (RL) is a key area for DeepMind. It differs from other ML subfields, and there are countless papers and projects to understand, such as AlphaGo, DQN and OpenAI's robotics work.

Faced with this challenge, he began with the area that interested him most, computer vision (CV), and then moved on to less familiar topics.

Throughout, he did not study in isolation. He benefited from regular conversations with people such as DeepMind researcher Petar Veličković, one of the leading researchers in graph ML, and with readers of his blog posts.

Sharing what he learned

He mentioned that he gave a talk to more than 300 colleagues at Microsoft, which pushed him out of his comfort zone.

Speaking in front of 300+ Microsoft colleagues

That talk was one of many steps outside his comfort zone. Besides taking in knowledge, he digested it and shared it with others. He started his own YouTube channel and made several video series, on topics such as NST and graph neural networks (GNNs). He used them to share his ML learning journey online, which helped him think more deeply while also helping others.

This image is an NST composite generated with his code, and you can create your own using his GitHub project.

The BERT and GPT model families were also within the scope of his study, and understanding the Transformer helps in understanding them. He started reading NLP and Transformer papers from scratch. Since he speaks both English and German, he built an English-German machine translation system. He kept track of his learning journey mainly in OneNote.

Excerpt from his OneNote notes

At Microsoft, he worked on various software engineering (SE) and ML projects. These included developing an eye-tracking algorithm for the eye-tracking subsystem on HoloLens 2 and using video encoding to add foveated rendering to various VR/MR devices.

DeepMind opens its doors to him

In April 2021, Petar introduced him to a headhunter. After reviewing his YouTube videos, GitHub repositories and LinkedIn profile, the headhunter recommended him, and he was invited to interview with DeepMind.

Writing about this, he stresses the importance of networking when looking for a job, and of building quality connections with like-minded people.

The CV he used to apply to DeepMind (in his blog post, he also suggests ways it could be improved)
